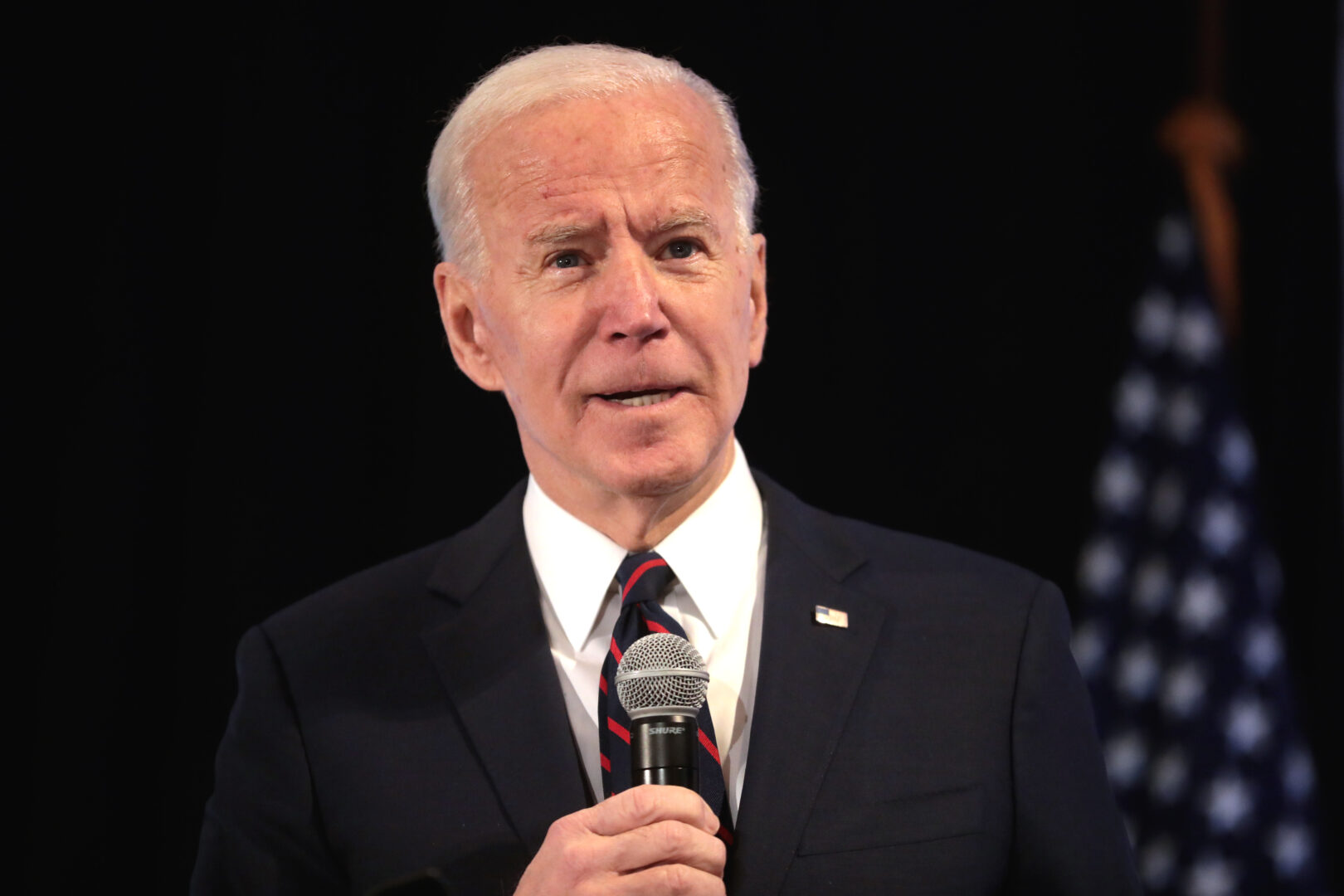

In a pivotal meeting on Friday, leading US artificial intelligence companies are set to publicly commit to improving safeguards for cutting-edge technology at the request of the Biden administration, sources shared. Tech giants like Alphabet Inc.’s Google, Microsoft Corp., and OpenAI are among those pledging to responsibly develop and deploy AI, a measure prompted by a White House warning on the potential for harm if this technology remains unchecked.

The commitment, however, is entirely voluntary, offering a stark illustration of the limitations President Joe Biden faces in directing the course of sophisticated AI models and averting potential misuse. Despite lawmakers’ efforts to better comprehend AI through several information sessions, the prospect of consensus on regulation remains elusive. The lack of concrete legislation underlines the urgency of private companies’ voluntary safety commitments.

The White House, represented by Chief of Staff Jeff Zients, acknowledges that the regulatory process moves slowly and is pressing for quicker action. “We cannot afford to wait a year or two,” Zients said during a podcast discussion last month.

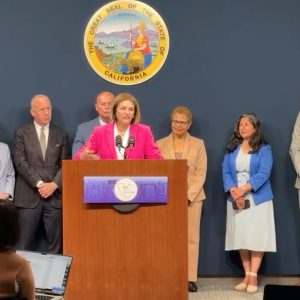

Other leading tech companies have prepared commitments in response to the Biden administration’s call, following a May meeting with Vice President Kamala Harris. However, these commitments are being outpaced by advances in AI, which are driven both by corporate competition and by fear of Chinese dominance in innovation.

While the fear of Chinese dominance in AI development is palpable, the US is also trailing elsewhere: Europe. The European Union has moved forward with its AI Act, putting it well ahead of the US Congress’s nascent efforts to regulate AI. Recognizing the need for a preliminary agreement with AI companies before formal legislation, the European Union’s internal market commissioner, Thierry Breton, has been advocating for an “AI Pact”.

Yet the United States has made progress. After a meeting with Vice President Kamala Harris, executives from tech behemoths including Microsoft, Alphabet, and OpenAI agreed to uphold certain safeguards. These safeguards aim to ensure that their AI products remain safe and secure, and that harms caused by AI-generated content are not shielded from liability under Section 230. Another provision asks that political ads disclose the use of generative AI.

Moreover, there is a growing push to prioritize research that assesses the societal risks posed by AI, including harmful bias and threats to privacy. Using independent experts to pressure-test AI systems for vulnerabilities before public release has also been suggested, and firms have committed to sharing details about managing AI risks with their industry peers and governments.

The White House seeks to foster a bipartisan push for legislation on responsible AI innovation and is preparing an executive order on the issue. A government official described this as a “high priority” for Biden and his team. Meanwhile, Amazon.com Inc. and Meta Platforms Inc., along with OpenAI, Anthropic, and Inflection, have already pledged to follow a set of voluntary guidelines to mitigate the risks associated with artificial intelligence.

The voluntary commitments from these companies indicate a significant step in the Biden administration’s attempts to regulate AI technology. However, critics caution that these same companies have had a mixed track record when it comes to keeping their promises on safety and security. As we move forward in the era of AI, this balance between innovation, regulation, and social responsibility will continue to be a challenging, but necessary, pursuit.